November 24, 2025



How do you build a successful experimentation culture inside and outside your organization? How do you use AI and unstructured data to generate really valuable insights? And how do you ensure that optimizations meet real user needs, including those of people with disabilities?

During Experimentation Elite 2025, eight speakers shared their vision and practical experience in conversion optimization, UX, AI, personalization and digital accessibility. From the BBC to Zalando, and from behavioral psychology to experiment design, these talks offer valuable insights for anyone working on data-driven optimization.

For us, the event was extra special: Online Dialogue won no fewer than three awards!

🏆 E-commerce Campaign of the Year - with Travelbags.co.uk

📈 Media & Marketplaces Campaign of the Year - with Vattenfall

🛫 Travel & Hospitality Campaign of the Year - with Bidfood Netherlands

In this blog you will find key lessons from talks by Ruben de Boer, Juliana Jackson, Desiree van der Horst and Vignesh Lokanathan, among others.

In his talk, Ruben de Boer (Lead Experimentation Consultant at Online Dialogue) showed that many specialists in conversion optimization and data-driven work are very good at improving websites but often fail to apply the same approach within their own organization, which is precisely where huge gains can be made.

Ruben shared how he found out in 2015 that a culture of experimentation does not come naturally. His attempts to get colleagues excited through updates, presentations and challenges did not achieve the desired results. The reason? He was not yet applying the experiment mindset to his approach. He did not research what colleagues needed or how they viewed experimentation. This changed later.

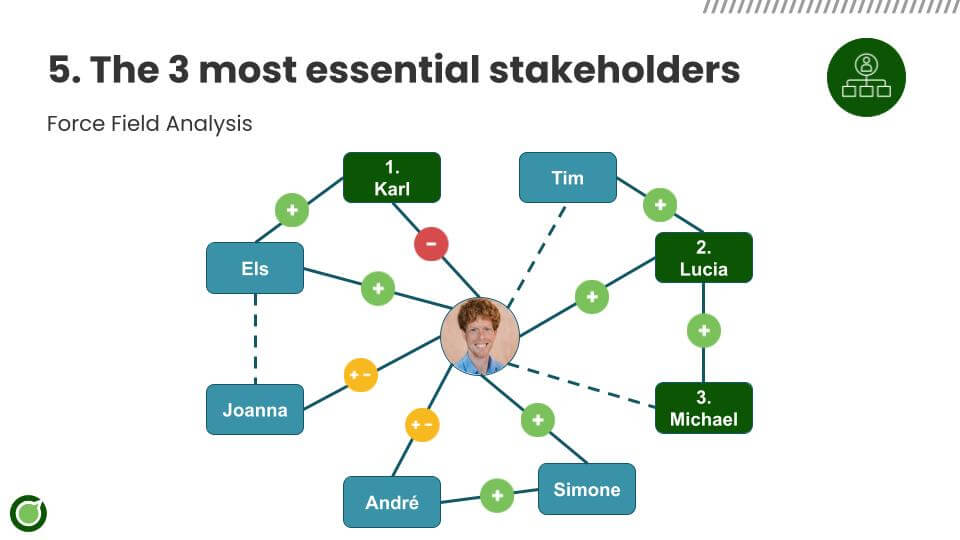

1. Map stakeholders. Use the organizational chart as well as informal networks. Determine who is for, against or neutral toward experimentation, and tailor your approach to each person's role and influence.

2. Conduct ‘colleague research’. Talk to colleagues as you would to users. Ask about their goals, concerns and obstacles; that is how you find starting points for collaboration.

3. Avoid the ‘frustration loop’. Data or external examples alone (such as “Netflix does this too”) will not convince anyone. Show interest in how colleagues work and help them achieve their goals.

Juliana Jackson called for a fundamental overhaul of how we analyze customer behavior. Tools such as heuristic analysis, funnel analysis and surveys focus only on the last part of the journey: the website. As a result, we miss the broader context that plays out beyond the website, for example on social media, in search or through word of mouth. Companies collect a huge amount of information but focus mainly on metrics at the end of the funnel, such as last-click conversions.

Her solution lies in leveraging unstructured data (such as reviews, searches, videos) that provide much better insight into emotion, intent and behavior. By applying techniques such as Natural Language Processing (NLP) and machine learning, these data sources can be structured and analyzed. For example, you can discover user problems through app reviews, or unravel people's real questions and information needs through searches.
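The talk did not show code, but the core idea of mining reviews for recurring problems can be sketched in a few lines. This is a deliberately simple stand-in for real NLP, assuming reviews arrive as (star rating, text) pairs: it only counts frequent words in low-rated reviews. The `complaint_terms` function, its parameters, the stop-word list and the sample reviews are all illustrative assumptions.

```python
import re
from collections import Counter

# Illustrative stop-word list (an assumption); NLP libraries ship fuller ones.
STOP_WORDS = {"a", "an", "and", "app", "at", "i", "is", "it", "my", "of", "on", "or", "the", "to"}


def complaint_terms(reviews, max_stars=2, top_n=5):
    """Return the most frequent content words in low-rated reviews.

    `reviews` is a list of (stars, text) pairs. Only reviews rated
    `max_stars` or lower are used, assuming those describe problems.
    """
    counts = Counter()
    for stars, text in reviews:
        if stars > max_stars:
            continue
        # Lowercase and split into simple word tokens.
        words = re.findall(r"[a-z']+", text.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts.most_common(top_n)


# Made-up sample reviews for illustration.
reviews = [
    (1, "App crashes at checkout"),
    (2, "Checkout keeps crashing, login is slow"),
    (5, "Love it"),
]
print(complaint_terms(reviews, top_n=1))  # [('checkout', 2)]
```

Note that "crashes" and "crashing" are counted separately here; merging such variants, scoring sentiment or clustering topics is what proper NLP and machine-learning tooling would add.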

We need to align content with user intent. Instead of writing for algorithms, brands should focus on helpful, human-centric content. Combining insights from unstructured sources with behavioral data creates stronger hypotheses and better user experiences.

In short: abandon old-fashioned CRO methods, use data science to gain deeper insights and build optimizations on real user needs.

Desiree van der Horst (CRO Manager at Loop Earplugs) shared how she used structure and research to keep a grip on a tricky homepage design process. With a complex product offering and a primarily mobile audience that often makes one-time purchases, a clear, compelling first impression is crucial. The challenge: how do you design a homepage that works for the user and fits within the company's broader ambitions?

To answer that question, Desiree used the 6V model: a framework that brings together insights from six perspectives:

All this input was collected in a central Figma file. Seventeen A/B tests with different versions of the homepage followed. Some focused on the type of image or text above the fold, others on the role of elements such as sliders. The outcome was surprising: a version with a slider ultimately performed best, when combined with clear calls to action at the top.

An inspiring example of how research and experimentation help inform choices, even when the outcome differs from what you expected.
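The post does not say how the seventeen homepage tests were evaluated. A common way to decide whether a variant really beat the control on conversion rate is a two-proportion z-test; the sketch below assumes that approach, and the function name and the input numbers are hypothetical, not results from the talk.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare the conversion rate of variant B against control A.

    Returns (z, p_value), where p_value is two-sided and based on the
    pooled standard error under the null hypothesis of equal rates.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("No variation in outcomes; the test is undefined.")
    z = (p_b - p_a) / se
    # Two-sided tail probability of the standard normal: P(|Z| >= |z|).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical numbers for illustration only.
z, p = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=130, n_b=1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

When many variants are tested, as in this case, the chance of a false positive grows, so a correction for multiple comparisons or a single pre-agreed primary metric is worth considering.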

In his talk, Vignesh Lokanathan (Experimentation Lead at the BBC) brought a powerful message about accessibility. With personal examples and practical insights, he showed that many barriers, both physical and digital, become visible only when you encounter them yourself or through someone else. He calls such obstacles “invisible steps”: barriers that are invisible to some, but all-important to others.

An experience at a language school, where a subtle barrier made access impossible for his son, who uses a wheelchair, opened his eyes. At first everything seemed to be arranged, until reality proved otherwise. Later, during the lockdown, a language app offered new opportunities, until digital barriers arose there as well. Because of his motor disability, his son had to navigate the app with his knuckles. What seemed accessible at first became increasingly frustrating. This led to a broader question: why do such barriers still exist in the digital world?

According to Vignesh, accessibility is not only about people with disabilities. Good accessibility means a better experience for all users: older people, people with temporary impairments, and people whose abilities are limited by their situation. Think of bright sunlight making contrast more important, a broken arm forcing you to navigate with one hand, or a busy environment where you cannot hear or concentrate as well.

In short: good digital accessibility opens the door to millions of potential customers.

Three reasons to get started:

An accessible experience must:

Use the WCAG guidelines as a guide and start with your customers. Understand their obstacles and test whether what you create really works for everyone.
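Vignesh's point about contrast in bright sunlight maps onto a concrete WCAG check. The sketch below implements the WCAG 2.x relative-luminance and contrast-ratio formulas for 8-bit sRGB colors and tests the 4.5:1 AA threshold for normal-size text; the function names are our own.

```python
def _linearize(channel):
    """Convert an 8-bit sRGB channel (0-255) to linear light."""
    c = channel / 255
    # WCAG 2.0 writes the threshold as 0.03928; for 8-bit values the result is the same.
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb):
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg, bg):
    """WCAG contrast ratio (L1 + 0.05) / (L2 + 0.05), with L1 the lighter color."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(fg, bg):
    # WCAG success criterion 1.4.3 (AA): at least 4.5:1 for normal-size text.
    return contrast_ratio(fg, bg) >= 4.5


WHITE = (255, 255, 255)
print(round(contrast_ratio((0, 0, 0), WHITE), 2))  # 21.0
print(passes_aa_normal_text((0x76, 0x76, 0x76), WHITE))  # True
print(passes_aa_normal_text((0x77, 0x77, 0x77), WHITE))  # False
```

Gray #767676 on white just passes (about 4.54:1) while #777777 just fails (about 4.48:1), which shows how thin the margin can be, and why checking rather than eyeballing matters.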

Vignesh's core message: don't just be aware of accessibility, but actively act on it. With every product or adaptation, ask yourself: is there an ‘invisible barrier’ we can remove? Who are we still inadvertently excluding? And what can we do about it?

Annette Rowson shared how she built a culture of experimentation at Primark, a traditionally store-driven organization. Following the COVID pandemic, Primark launched a digital transformation in 2022, including a revamped website, stock visibility and Click & Collect. Rowson was tasked with building an experimentation program from scratch, in a ‘just do it’ culture with no data-driven underpinning or knowledge of A/B testing.

The challenges were considerable: no suitable tools, hardly any testing capacity, little developer support, and product teams making decisions on gut feeling. In 2023, tools such as Dynamic Yield and Contentsquare were introduced and CRO specialists were placed in squads. The team also launched initiatives to spread experimentation thinking, such as ‘Data Champions’ and the ‘Data & Insight Surgery’. Together, these increased visibility and engagement.

But by 2024, new bottlenecks had emerged: many tests proved meaningless or poorly substantiated, stakeholders bypassed the testing process, and the qualitative and quantitative teams worked in parallel rather than together. As a solution, analytics and CRO were merged within the squads and KPIs were defined jointly. An experiment request form was also introduced to enforce focus and prioritization.
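The post does not describe Primark's request form itself. As a hypothetical sketch, a form that enforces focus and priority could send back requests that lack a hypothesis, supporting evidence or one of the jointly agreed KPIs, and rank the rest. Every field name, KPI and the 1 to 5 impact scale below is an illustrative assumption.

```python
from dataclasses import dataclass

# Hypothetical KPIs jointly agreed by analytics, CRO and product teams.
SHARED_KPIS = {"conversion_rate", "click_and_collect_orders", "average_order_value"}


@dataclass
class ExperimentRequest:
    title: str
    hypothesis: str       # e.g. "If we ..., then ..., because ..."
    primary_kpi: str      # must be one of SHARED_KPIS
    evidence: str         # research or data behind the idea
    expected_impact: int  # requester's estimate, 1 (low) to 5 (high)


def validate(request):
    """Return reasons to send the request back; an empty list means accepted."""
    problems = []
    if not request.hypothesis.strip():
        problems.append("missing hypothesis")
    if request.primary_kpi not in SHARED_KPIS:
        problems.append(f"KPI '{request.primary_kpi}' is not a shared KPI")
    if not request.evidence.strip():
        problems.append("no supporting evidence")
    if not 1 <= request.expected_impact <= 5:
        problems.append("expected_impact must be between 1 and 5")
    return problems


def prioritize(requests):
    """Keep only valid requests, ordered by expected impact (highest first)."""
    valid = [r for r in requests if not validate(r)]
    return sorted(valid, key=lambda r: r.expected_impact, reverse=True)


good = ExperimentRequest("Store stock badge", "If we show store stock, then more "
                         "Click & Collect orders follow, because users trust availability",
                         "click_and_collect_orders", "Customer survey", 4)
vague = ExperimentRequest("New button color", "", "page_views", "", 2)
print(validate(vague))
print([r.title for r in prioritize([vague, good])])
```

The design choice is to reject early and explicitly: a request that cannot name a shared KPI never reaches the testing backlog.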

The focus shifted from quick successes to impactful experiments. Building trust proved crucial: product teams got involved and learned to work iteratively, even when tests failed. Failure was celebrated as a learning opportunity. The success of the Click & Collect trial underscores the potential of this approach.

Key lessons: be patient, agree on common KPIs, work closely with product/UX teams, start with quick wins for support, and embrace failure as part of growth.

Nils Stotz of Zalando showed how AI can not only accelerate experimentation but, in some areas, fundamentally change it. No hype, just concrete answers: where does AI already add value, and where are the opportunities for the future?

Nils mentioned some examples where AI is already helping in the experimentation process:

Besides general AI challenges such as quality, reliability and hallucinations, Nils raised a more specific concern: over-reliance on AI.

In an interview, a machine learning engineer highlighted the fear of losing satisfaction if AI dictates daily tasks. This raises the question of the potential impact of AI on motivation in high-skill positions such as product management or engineering.

In many companies, the bottleneck is not in the process, but in the lack of traffic. And that's where AI gets really interesting. Think “silicon participants” or digital twins that can mimic human traffic.


A small addition from Ruben: I hear such statements more and more often, and I find them rather optimistic. For now, I agree with Els Aerts: you can use synthetic users as an addition to your exploratory research, but they are not a replacement for your real users, and so they will not find the right solution for you.

Slide by Els Aerts at the GMS25 conference.

Finally, Nils shared some great practical tips:

Katie Dove's presentation was about effective personalization. Companies make three common mistakes that undermine the psychological value of personalization: they don't make people feel seen, they aren't transparent and don't involve users, and they don't make the customization visible. By using insights from behavioral science, you can make personalization feel like a genuine, personal effort, similar to a thoughtful gift that shows someone knows you.

The first mistake is a lack of recognition: people want to feel ‘seen’. A personal recommendation only works if it explicitly refers to previous interactions or choices (so-called ‘callbacks’). For example, Netflix's “Because you watched Grey's Anatomy” is more effective than an anonymous recommendation. Such callbacks reinforce the sense of relationship and self-recognition.
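A callback can be as simple as carrying the reason for a recommendation along with it. The hypothetical sketch below recommends unseen titles from genres the user has watched and attaches a "Because you watched X" line; the genre-matching rule, the data shapes and the example titles are assumptions for illustration, not how Netflix actually works.

```python
def recommend_with_callbacks(watched, catalog, limit=3):
    """Recommend unseen titles from genres the user has watched, each with a
    callback explaining why it was recommended.

    `watched` and `catalog` are lists of (title, genre) pairs; `watched` is
    in viewing order, so later entries are more recent.
    """
    seen = {title for title, _ in watched}
    # Most recently watched title per genre, used as the callback reference.
    last_by_genre = {}
    for title, genre in watched:
        last_by_genre[genre] = title
    recs = []
    for title, genre in catalog:
        if title in seen or genre not in last_by_genre:
            continue
        recs.append((title, f"Because you watched {last_by_genre[genre]}"))
    return recs[:limit]


watched = [("Grey's Anatomy", "medical drama")]
catalog = [
    ("Grey's Anatomy", "medical drama"),
    ("Example Hospital Series", "medical drama"),
    ("Example Sitcom", "comedy"),
]
for title, reason in recommend_with_callbacks(watched, catalog):
    print(f"{title}: {reason}")
```

Keeping the reason attached to each recommendation, rather than generating it afterwards, guarantees that the callback shown to the user matches the logic that actually produced the suggestion.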

The second mistake is opacity. When personalization is based on silent data collection, it feels uncomfortable or even creepy. A well-known example is Target, which started showing baby product suggestions based on previous buying behavior. This was perceived as ‘creepy’ because Target neither involved users in the process nor showed them how their data was being used. Instead of trying to make everything frictionless, Dove actually recommends asking questions. That increases engagement, acceptance of recommendations and the user's sense of control. Users want to know what you know and why.

The third mistake is that personalization often goes unnoticed: users simply do not see the customization. Companies therefore need to make the work they have done explicit and show which steps were taken. A recent example is how GenAI tools spell out their thought process, which increases confidence in the outcome.

Key lessons:

Good personalization feels not only smart, but more importantly human.

David Mannheim built his talk around a clear message: organizations are shaped by what they measure. If you measure the wrong things, or misuse metrics as targets, you get behavior optimized for exactly those metrics, which is often out of line with your real goal or with the user's experience.

When organizations choose the wrong KPIs, we unintentionally steer toward the wrong outcomes. Think short-term focus, metrics that are easily manipulated, and the creation of perverse incentives.

Among other things, David cites the Cobra effect: in India, people received a reward for each dead cobra they handed in. The result? People started breeding cobras just to turn them in.

David therefore advocates a fundamental shift within CRO: instead of optimizing for pages (home, PLP, PDP) or per-page conversion rates, optimize for the stage a user is in.

Within each stage, look not just at clicks or scrolls but at the full set of behavioral signals: the user's digital “body language”.

Case in point: a discount pop-up is not wrong in itself, but it is only relevant when shown at the right moment, to someone who is seriously considering a purchase rather than someone who has just arrived.
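The pop-up example can be sketched as a rule that first estimates the user's stage from several signals and only then decides whether to show the offer. The signals, stage names and thresholds below are hypothetical and untuned; in practice they would come from your own behavioral data and experiments.

```python
from dataclasses import dataclass


@dataclass
class SessionSignals:
    # Hypothetical behavioral signals for a single session.
    pages_viewed: int
    product_pages_viewed: int
    returned_to_same_product: bool
    added_to_cart: bool


def journey_stage(s):
    """Classify a session into a coarse stage using illustrative, untuned rules."""
    if s.added_to_cart:
        return "purchase"
    if s.pages_viewed <= 1:
        return "arrival"
    if s.returned_to_same_product or s.product_pages_viewed >= 3:
        return "consideration"
    return "orientation"


def should_show_discount_popup(s):
    # Only interrupt users who appear to be seriously considering a purchase.
    return journey_stage(s) == "consideration"


newcomer = SessionSignals(pages_viewed=1, product_pages_viewed=0,
                          returned_to_same_product=False, added_to_cart=False)
considerer = SessionSignals(pages_viewed=6, product_pages_viewed=3,
                            returned_to_same_product=True, added_to_cart=False)
print(should_show_discount_popup(newcomer), should_show_discount_popup(considerer))
```

Combining several signals into a stage, instead of triggering on a single click or scroll depth, is the point of the "body language" framing.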

Experimentation Elite 2025 showed convincingly that experimentation goes far beyond optimizing conversions. It's about building a culture where you dare to experiment, learn from mistakes and put the human behind the data at the center.

Digital products are intertwined with our daily lives. Successful experimentation requires collaboration across disciplines, from UX and development to data and strategy, to create digital experiences that are intuitive, accessible and compelling. That is exactly how we work with our clients at Online Dialogue.

Whether you're experimenting with AI, working on accessible UX, building hypotheses from NLP or getting your own team into a testing mindset: it's all about listening, learning and adjusting.

Want to continue building your own culture of experimentation? Get inspired by these practice stories.

🔍 Want to learn more about data-driven working, CRO, AI and accessible UX? Subscribe to our newsletter or follow Online Dialogue on LinkedIn.